Feature detection and targeted advertising 2019, United Kingdom, London

User reactions to targeted advertising based on face detection and feature detection in the wild

This report explores the use of face detection in the world of advertising and how such technology is used to direct personalised advertisements to people in their everyday city environments.

This project examines how people react when they are confronted with targeted advertising based on predicted data-points gathered directly from an image of their face, obtained only seconds beforehand. It seeks to understand how people feel when they become aware that their image has been scanned for data-points which lead directly to the selection of an advertisement that the algorithm deems most suitable for them. The researcher created an installation using face detection technology and machine learning. Participants' faces are captured by a video camera, the image of each face is analysed, and data-points about the participant are predicted. These data-points are displayed on a screen, overlaying the photograph of the face from which they derive. Based on these data, a targeted advertisement is selected and displayed. Data on the responses to the display are gathered via questionnaires and informal interviews, as well as video footage of the participant's behaviour. The results show that there is a high level of awareness of this technology, and that those who are more aware are more positive about seeing their image and data-points, but this has little impact on their reaction to seeing the personalised advertisement. A majority of people feel negatively about the selected advertisements. The age of the participant proved to be a factor: in general, younger people tend to feel more positive about the use of face detection in advertising, and older people are more resistant.

Details

Team members : Thomas Hamel Cooke

Supervisor : Associate Professor Ava Fatah gen Schieck

Institution : Bartlett School of Architecture, University College London

Partners : Developed as part of Body as Interface | City as Interface studio, MSc Architectural Computation, Bartlett School of Architecture, University College London

Descriptions

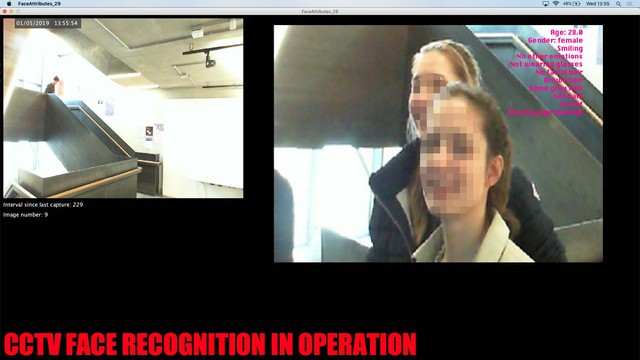

Technical Concept : A programme was developed that captures video and picks out faces from the footage (using OpenCV object detection based on Haar feature-based cascade classifiers), then sends them as still images for analysis and feature recognition through machine intelligence. The library video.processing was used to capture video data from the camera. The programme displays the same image that is sent to Microsoft (using the Face API for feature detection) for analysis; the predicted details that are returned are translated into descriptive details that overlay the image on the screen. The algorithm selects a relevant advertisement based on the most prevalent of the person’s features, as predicted by machine intelligence, and this advertisement is displayed back to the participant.
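The final selection step described above can be sketched as follows. This is only an illustration, not the project's actual code: it assumes the predicted features arrive as a mapping from feature name to a confidence score between 0 and 1, and it reads "most prevalent" as "highest score". The catalogue, the file names, the `threshold` parameter and the function name `select_advertisement` are all hypothetical.

```python
from typing import Dict

# Hypothetical catalogue mapping a predicted feature to an advertisement file.
# Neither the feature keys nor the file names come from the original project.
AD_CATALOGUE: Dict[str, str] = {
    "glasses": "ad_eyewear.png",
    "beard": "ad_grooming.png",
    "makeup": "ad_cosmetics.png",
    "headgear": "ad_hats.png",
}
DEFAULT_AD = "ad_generic.png"


def select_advertisement(scores: Dict[str, float], threshold: float = 0.5) -> str:
    """Return the advertisement for the highest-scoring known feature.

    Assumption: `scores` maps feature names to confidences in [0, 1].
    Features that have no advertisement, or that score below `threshold`,
    are ignored; if nothing qualifies, a generic advertisement is returned.
    """
    candidates = {
        feature: score
        for feature, score in scores.items()
        if feature in AD_CATALOGUE and score >= threshold
    }
    if not candidates:
        return DEFAULT_AD
    best_feature = max(candidates, key=candidates.get)
    return AD_CATALOGUE[best_feature]
```

For example, `select_advertisement({"glasses": 0.9, "beard": 0.6})` picks the eyewear advertisement under these assumptions, while an empty or low-confidence prediction falls back to the generic one.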

Visual Concept : The passer-by is recorded in real time and face detection technology locates their face. One section of the screen shows the video being taken. The detected face appears with a blue box around it so the participant can see that face detection is in progress. A snapshot of the face is taken, displayed on another part of the screen, and sent via the internet to a server which analyses the features using machine intelligence and returns predicted data-points about the person whose image has been captured. The data-points are translated into information that is displayed over the image of the face from which they derive. These details include age, gender, hair, glasses, beard, moods, makeup, and headgear. After a few seconds, the image of the face is replaced by an advertisement designed to appeal to the user, based on the data-points gathered from analysis of their image.
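The translation of data-points into on-screen text could look roughly like the sketch below. It is an assumption-laden illustration rather than the installation's code: the input dictionary is a simplified, hypothetical structure (not the actual response format of any face analysis service), and the function name `describe_face` and the label wording are invented for this example.

```python
from typing import Any, Dict, List


def describe_face(attributes: Dict[str, Any]) -> List[str]:
    """Turn predicted data-points into short lines of overlay text.

    Assumption: `attributes` is a simplified dictionary with optional keys
    "age" (number), "gender" (string), "glasses" (bool) and "moods"
    (mapping of mood name to confidence). Missing keys are skipped.
    """
    lines: List[str] = []
    if "age" in attributes:
        lines.append(f"Age: about {round(attributes['age'])}")
    if "gender" in attributes:
        lines.append(f"Gender: {attributes['gender']}")
    if "glasses" in attributes:
        lines.append("Glasses: yes" if attributes["glasses"] else "Glasses: no")
    moods = attributes.get("moods")
    if moods:
        # Show only the mood with the highest predicted confidence.
        strongest_mood = max(moods, key=moods.get)
        lines.append(f"Mood: {strongest_mood}")
    return lines
```

Each returned line would then be drawn over the snapshot of the face; skipping missing keys keeps the overlay limited to what was actually predicted.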

Credits

Thomas Hamel Cooke
